Machine Translation and Natural Language Processing

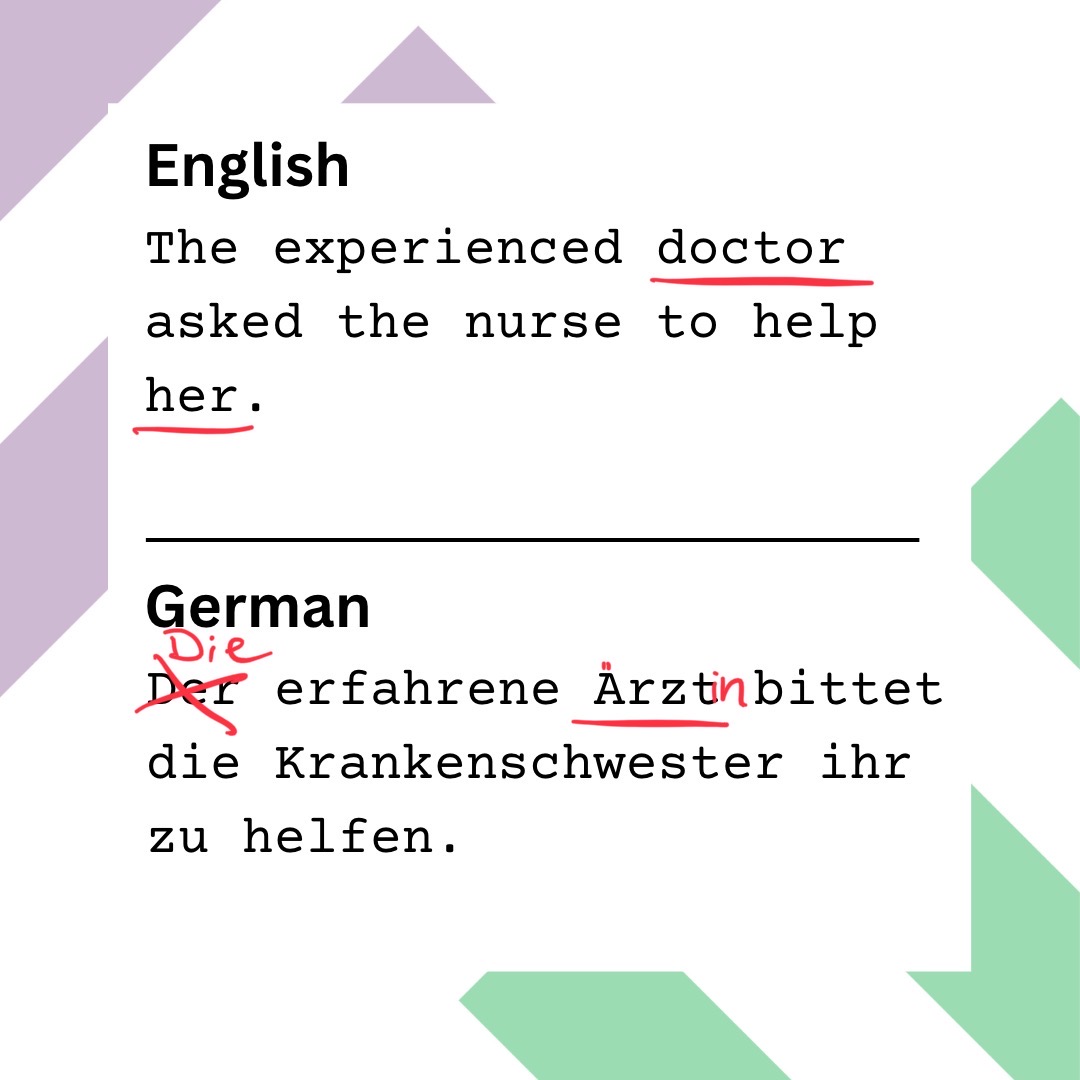

Reading through various studies on gender bias in machine translation, I stumble across the sentence: The doctor asked the nurse to help her. It’s used in a study that tests how gender is translated from English into languages which, unlike English, have grammatical gender. This attribution is particularly relevant when it comes to terms that label people. In English, for example, doctor is gender-neutral, whereas in German one would traditionally have to choose between ‘Arzt’ or ‘Ärztin’, the former a male doctor, the latter female. Intrigued, I open one of the most popular translation engines to see what happens when I translate this sentence into German. Contrary to my expectations, doctor is not translated as Ärztin, but Arzt, even though the pronoun her clearly indicates the doctor in question is female. This astonishes me a little and I continue to play around with the sentence. What happens if I add the descriptor beautiful in front of doctor? Not so surprisingly, doctor is now translated as Ärztin. If I change the adjective to experienced, the masculine form Arzt reappears. And here I am only moving within the binary gender order, the attempt to depict identities beyond the binary fails miserably. But why does this different gender assignment take place? How does a translation tool decide which words are translated when and how?

The underlying technology of translation machines is called Natural Language Processing (NLP), which combines branches of computer science, linguistics and artificial intelligence. NLP is a very complex process that requires enormous quantities of data. Importantly, this data was actually produced by humans, be it spoken words, newspaper articles or posts on social media. This collection of data is called a corpus, which is further processed by splitting the texts into individual sentences and their phrases on a structural level (tokenisation). In the next step, individual words are coded according to word types (part-of-speech tagging), but if words do not carry important content information, such as and and or, they are removed (stop word removal). The remaining words are now reduced to their root words (stemming). For example, the words likely, likes and liked, if the suffixes are removed, all belong to the root word like. These words are also shortened to their basic form, but the context is additionally taken into account, e.g. better belongs to the lemma good (lemmatisation). A lemma is an abstract unit, namely a word without syntactically relevant inflection.

These are only a few selected processes of NLP, and the challenges that go along with them are very different in every language. What is most fascinating, however, is the encoding of words at the level of meaning. Due to the large amounts of data that are processed, the program already learns which words are very likely to occur together in certain contexts and which are not (collocations). For example, in a sentence such as “She leaves the bank”, the program knows, based on the surrounding words, that leaves has to be the third person singular present of the verb leave, instead of the plural form of the noun meaning the leaves on a tree, which do not appear in such a context. Basically, the program forms an algorithm on the basis of linguistic patterns, through which texts are to be created that are as close as possible to the human use of language (natural language generation).

With algorithms, the danger always exists that an “algorithmic bias” arises, and NLP is no exception. This can in fact manifest while processing the training data. Bias entails a tendency to hold prejudices against a person or thing and, as a consequence, to disadvantage that person or thing. The tendency in translation engines to favour a certain vocabulary over others is evident from the fact that the lexical diversity of the corpus used to train a translation engine is significantly higher than the translations based on that corpus. Since the program recognises which words frequently occur together, it always falls back on the most commonly used collocations when producing translations and, as a result, stereotypes are picked up and reproduced. To return to the initial example, in which the translation engine selects the female form Ärztin in connection with the adjective beautiful, but the male form Arzt in connection with the adjective experienced, it is very likely that this collocation occurred frequently in the training data and this is what the algorithm has learnt from. This example not only shows the problems that an algorithm causes by not selecting the correct gender for the person portrayed, but also that the cause of this problem is a gender bias in our society, as women are more often described by their appearance, while men are more often defined by their actions.

But the problem does not end here. Algorithm bias goes hand in hand with „bias amplification“. Each of us holds stereotypes that are represented by our word choice. Accordingly, stereotypes are already present in the language data used for NLP. These are subsequently amplified by the bias of the algorithm. One study showed that in the training data around the word cooking, the cliché that cooking is associated with women was already represented. The word appeared 33 per cent less frequently with men than with women. After the program was trained with this corpus, this difference increased to 68 percent.

The fact that existing digital translation tools reproduce and reinforce the stereotypes of the corpora with which they have been trained and programmed is an ongoing problem that involves not only gender, but also racism, ableism and classism. Many researchers agree that this can only be solved through an interdisciplinary approach. The aim is to understand not only how bias is created and reinforced on the technical side, but also what sociological factors are involved in its creation and how these are reflected in our everyday language use.

The fact that translations need a lot of sensitivity in order not to reproduce stereotypes was one of the basic considerations when our project macht.sprache. was launched. macht.sprache. offers support in dealing with politically sensitive terms in English and German in form of a database that is created and developed collaboratively by all users. This collaborative knowledge feeds the Text Checker and the extensions for DeepL and Google Translate, which support Chrome and Firefox. The tools highlight potentially sensitive terms and give advice, empowering users to make sensitive decisions. But macht.sprache. can also simply serve as a source of information to learn more about language discrimination. You can register here to participate.