Discrimination, language, and translation tools

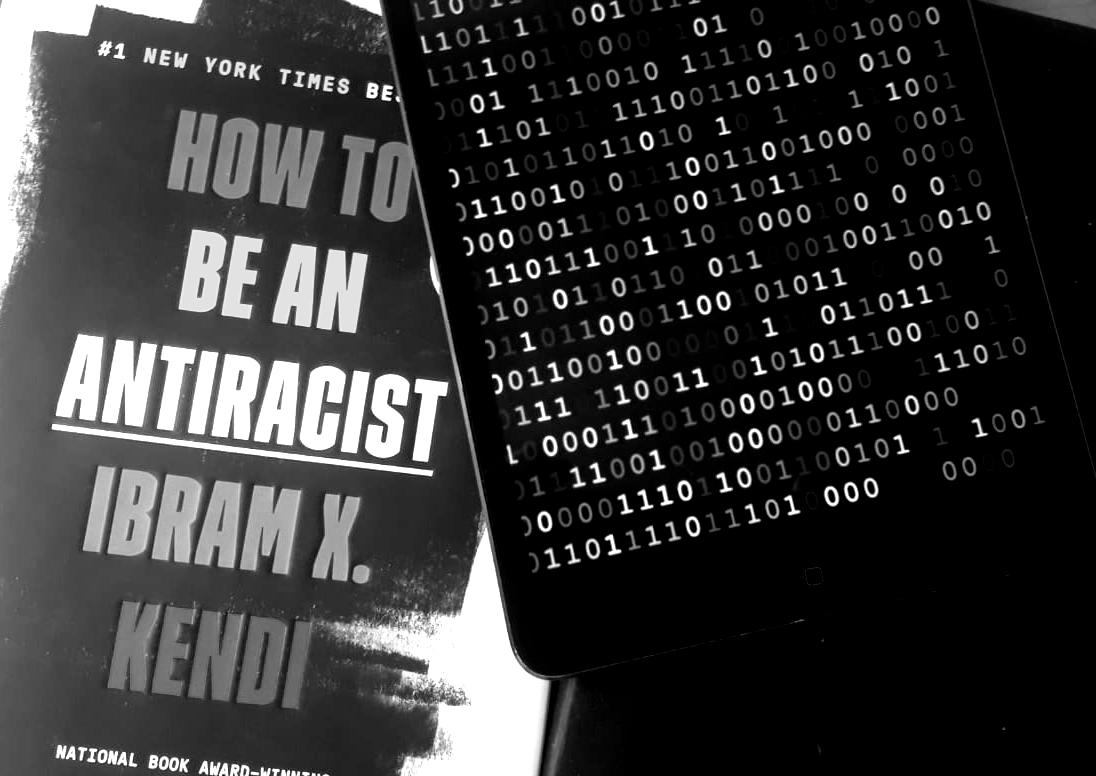

As part of our case.sensitive. project, we consider some of the issues surrounding translation and sensitive language. case.sensitive. is developing an app to foster politically sensitive translation between English and German. It aims to develop the culture of discussion around discriminatory language and to raise awareness of the problems surrounding politically loaded terminology and (machine) translation. In this post, we look at technology and implicit bias.

As more and more cultural activity moves into the virtual realm, many people may find themselves working increasingly with translation tools. There’s no doubt that these tools, many of which are available for free online, have done a lot to lighten the workload for those of us who communicate and publish bilingually. Sometimes they make it possible for a translated version to exist at all where this otherwise might not have been an option, which in itself can be a wonderful thing. At the same time, these tools, and the translations they offer, tend to suffer from biases embedded in their making.

For example, in machine translation, translating “He is a nurse. She is a doctor” into Hungarian and then back into English is likely to come back slightly altered, as “She is a nurse. He is a doctor.” Because Hungarian uses a single, gender-neutral third-person pronoun (ő), the gender information is lost on the way in, and the tool has to supply it on the way back. The choice it makes exposes the gendered associations that already coalesce around these different medical professions. Such gender bias is written into a word like Krankenschwester (which literally translates from the German as ‘sister to the sick’), which is likely to be the first suggestion a translation tool gives for translating ‘nurse’ into German. Here it becomes clear how the gendered biases attached to ‘nurse’ and ‘doctor’, which very much exist but remain latent in English, are exacerbated by careless translation.

As is often the case, initial discrimination reproduces itself and begets further discrimination. Some projects are expressly trying to counter the kind of bias perpetuation we see in machine translation and in other language-related technology, such as speech recognition. Examples include Artificially Correct, an upcoming project of the Goethe Institute (more about this soon), and Lahjoita puhetta (“Donate Speech”), a Finnish project that is explicitly trying to gather recordings of a more diverse array of voices on which to train speech recognition technology, in order to remedy an over-representation of white, middle-class voices. Because technology trained on these voices is more likely to recognise commands given by similar voices, and the ways of speaking most common to them, such systems implicitly help to establish this group’s ways of speaking as the norm, and other ways as deviations from it.

Another clear instance of this kind of silencing can be seen in detection systems designed to flag social media posts that are likely to be offensive. Such systems have been found to score tweets containing linguistic features associated with African American English as more offensive than tweets without them (find the article here). This implicitly marks African American English as deviant and increases the likelihood that tweets with these linguistic markers will be deleted, which may well worsen the existing under-representation of African American voices in public spaces.

Left unchecked, the tendencies these examples point to are problematic in a number of ways: they reinforce existing gender biases, and they silence precisely those voices that have already been historically and institutionally marginalised, notably those belonging to BIPOC communities. None of this is to say that there aren’t fun and creative ways to use these technologies too, as this poetry project suggests. What this discussion should make clear is that for cultural workers operating bilingually, feeding a text into existing translation tools can be a first, but by no means the only, step in producing a politically sensitive translation.